Is AI in Healthcare Ethical?

Artificial Intelligence in Healthcare & Massage Therapy

AI is quietly and quickly becoming a healthcare assistant. There are new AI charting scribe tools, tools that flag and summarize intake forms, and even tools that suggest treatment plans. But behind the convenience lie some important questions:

Is private healthcare information safe?

What is the environmental impact of using AI?

If AI makes a mistake, who is accountable?

To use AI in a way that respects the relationships we’ve worked so hard to build, we need to consider its impact.

Patient Privacy and Data Collection

When AI is used in healthcare, it’s often processing sensitive personal health information. In the U.S. and Canada, this information is protected by strict privacy laws such as HIPAA (U.S.) and PIPEDA (Canada).

Both laws aim to ensure that information is collected, stored, and shared securely, and that patients’ rights to privacy are respected.

Even if an AI vendor claims to be compliant, healthcare providers are still ultimately responsible for ensuring:

Data is encrypted during transmission and at rest

Access is limited to authorized personnel

There is a clear written agreement in place (in the U.S., a Business Associate Agreement) outlining how the AI vendor will handle, store, and delete information

From an ethical standpoint, patients should know when AI is part of their care. This allows them to make an informed decision about whether they are comfortable with that process.

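One way to reduce privacy risks under PIPEDA is to limit what information the AI receives. PIPEDA applies to personal information, meaning information about an identifiable individual. If an AI tool is used for general purposes and the input contains no names, birth dates, or other details that could identify a client, the interaction may fall outside PIPEDA’s requirements altogether. Keep in mind that a combination of details (a rare condition, a small town, and an approximate age, for example) can still identify someone. By keeping AI input free of personal identifiers, you can still benefit from its capabilities while avoiding many compliance concerns.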

Environmental Impact of AI in Healthcare

Running AI models requires massive amounts of computing power. When healthcare providers use AI, they indirectly contribute to high water and electricity demand. While any single clinic’s impact may be small, the collective demand is substantial.

The data centers that run large AI systems can use millions of liters of water each year to keep servers at safe operating temperatures, which can strain local water supplies.

AI models also require substantial electricity. If the electricity is sourced from fossil fuels, AI usage contributes more heavily to greenhouse gas emissions.

Mitigating the Environmental Impact

Healthcare organizations can take steps to reduce AI’s environmental toll, such as choosing vendors committed to sustainability, avoiding unnecessary queries, and advocating for greener infrastructure.

Bias and Fairness

AI learns from data, and if that data is incomplete or skewed, the results can be biased. In healthcare, this might mean less accurate diagnoses for certain age groups, genders, or ethnicities. To reduce bias, choose tools trained on diverse datasets and keep human review in place.

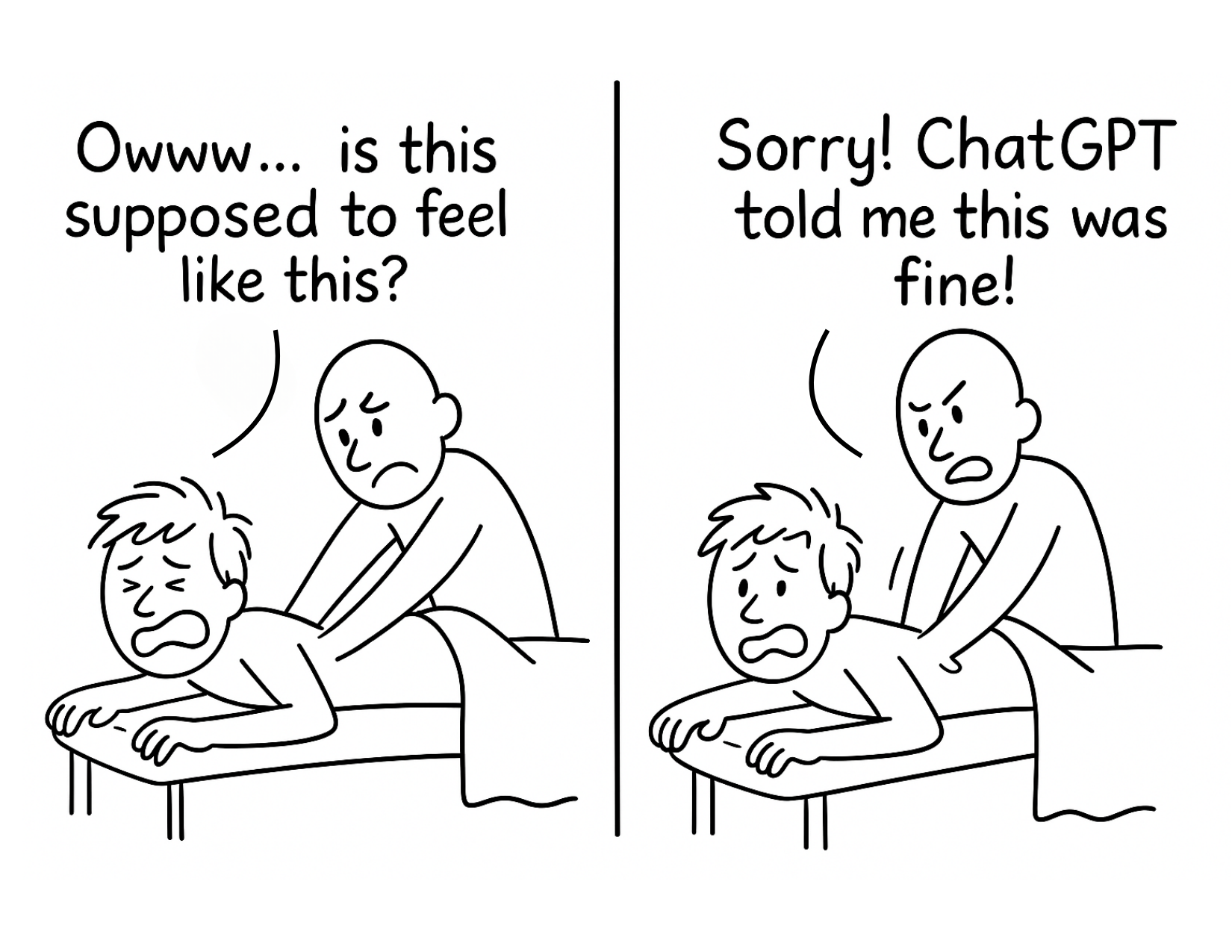

Accountability

When AI leads to an error, such as an unsafe treatment suggestion, the question of accountability becomes complicated. Is it the healthcare provider, the AI vendor, or both? Current legal frameworks often lag behind the technology, leaving providers in a gray area. Proceed with caution and apply your own professional judgment.

The funny thing is, I used AI to help create the image above, and I don’t know why the hands are missing! See, it makes mistakes!

Human Judgment

AI can speed up tasks and support decision-making, but it shouldn’t replace human judgment. Treat AI output as a recommendation, not a final answer. Ethical care comes from blending technology’s efficiency with human expertise and empathy.

Check out our research course below! One of the articles we highlight features massage and robotics!